Kubernetes Sandbox

How to expose the underlying Kubernetes layer of your clusters from the Orka CLI.

Archived

This page is archived and might be outdated. See Tapping into Kubernetes instead.

This page discusses Kubernetes workflows in the Orka CLI. For more information about workflows with the Orka API, see Orka API Reference: Nodes and Orka API Reference: Kube-Accounts.

On this page, you will learn how to:

- Create a Kubernetes Account.

- Perform a few commands to validate the connection.

- Deploy a service to all nodes and look at the load balancer behavior.

Quick command summary

orka kube create

export KUBECONFIG=$(pwd)/kubeconfig-orka

kubectl [version / get pods]

kubectl create -f deploy.yaml [deploy.yaml must exist in your current directory]

kubectl get [pods / services]

kubectl describe pods | grep Node

Configure your K8s account

To work with the exposed Kubernetes, you need a K8s service account.

- Create a user account.

orka kube create

- Double-check the kubeconfig-orka file. The server default is: https://10.10.10.99:6443

If your master node(s) use different IP addresses, you need to set the server value manually.
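To double-check the server entry without opening the file in an editor, a small helper along these lines may be enough. This is a minimal sketch: kubeconfig_server is a hypothetical name, and it assumes the usual kubeconfig layout in which each cluster entry has a "server:" line.

```shell
# Print every API server URL recorded in a kubeconfig file.
# Assumption: each cluster entry carries a "server: <url>" line.
# kubeconfig_server is a hypothetical helper, not part of the Orka CLI.
kubeconfig_server() {
  awk '$1 == "server:" { print $2 }' "$1"
}

# Example (run from the directory that holds the file):
#   kubeconfig_server ./kubeconfig-orka
```

If the printed URL does not match the address of your master node, edit the server value in the file before continuing.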

Basic K8s workflow

- Ensure the kubeconfig-orka file is being used to interface with the Kubernetes API server.

export KUBECONFIG=$(pwd)/kubeconfig-orka

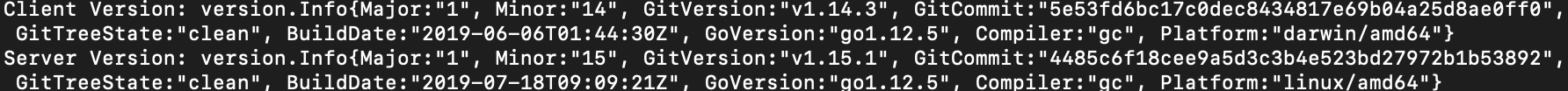

- Run a basic proof of functionality. This returns the version of the kubectl you downloaded.

kubectl version

Failure at this step indicates a bad binary. Success resembles:

Note that "Client Version" is the version of the kubectl binary you downloaded locally. It can differ from "Server Version" by up to 2 minor releases (so in this instance, a 1.13 would function as well). If "Server Version" is missing from the output, kubectl cannot connect to the cluster.

If this returns an error, first run echo $KUBECONFIG and make sure it points to the kubeconfig-orka file that was just created.
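The KUBECONFIG check can also be scripted so that it fails with a clear message. The sketch below is only illustrative: check_kubeconfig is a hypothetical name, and it assumes KUBECONFIG holds a single path rather than a colon-separated list.

```shell
# Report which kubeconfig file kubectl will pick up, or say why none is usable.
# Assumption: KUBECONFIG holds a single path. kubectl also accepts a
# colon-separated list, which this sketch does not handle.
check_kubeconfig() {
  if [ -z "${KUBECONFIG:-}" ]; then
    echo "KUBECONFIG is not set" >&2
    return 1
  fi
  if [ ! -f "$KUBECONFIG" ]; then
    echo "KUBECONFIG points to a missing file: $KUBECONFIG" >&2
    return 1
  fi
  echo "Using kubeconfig: $KUBECONFIG"
}

# Example:
#   check_kubeconfig && kubectl version
```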

- Get a list of the pods on the node. Returns none if nothing has been launched in the sandbox namespace.

kubectl get pods

- Run a test deployment.

kubectl create -f hello-kubernetes.yaml

Sample contents for a deployment.

# hello-kubernetes.yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-kubernetes
spec:
  externalIPs:
  - 10.10.12.5
  type: LoadBalancer
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: hello-kubernetes
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-kubernetes
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello-kubernetes
  template:
    metadata:
      labels:
        app: hello-kubernetes
    spec:
      containers:
      - name: hello-kubernetes
        image: paulbouwer/hello-kubernetes:1.5
        ports:
        - containerPort: 8080
      securityContext:
        runAsUser: 1000

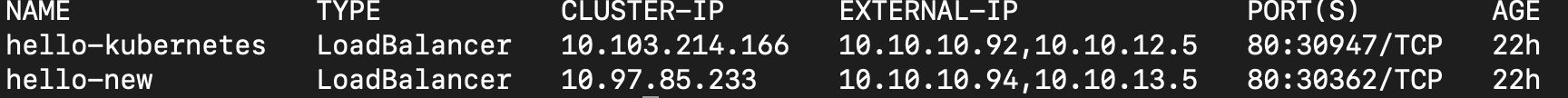
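Because kubectl create -f takes a file path, a missing or misplaced hello-kubernetes.yaml is an easy mistake at this step. The wrapper below is a minimal sketch of a guard around the command; deploy_manifest is a hypothetical name, not something the Orka CLI provides.

```shell
# Create the resources in a manifest, failing early with a clear message
# when the file cannot be found. The path is resolved like any other
# file argument (relative to the current directory unless absolute).
# deploy_manifest is a hypothetical helper.
deploy_manifest() {
  manifest="$1"
  if [ ! -f "$manifest" ]; then
    echo "manifest not found: $manifest" >&2
    return 1
  fi
  kubectl create -f "$manifest"
}

# Example:
#   deploy_manifest hello-kubernetes.yaml
```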

- Verify that the deployment was successful.

kubectl get pods

kubectl get services

This should show three pods and valid IP addresses, such as:

In this case, MetalLB allows access to 10.10.10.x, not 10.10.1x.x, so it assigned 10.10.10.92 to the service. The extra hello-new service was similarly assigned 10.10.10.94. Opening the assigned address in a browser displays the "Hello-Kubernetes" page.
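To pick out the assigned address from the service listing, a sketch like the following may help. It assumes the default table output of kubectl get services (columns NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE) and the 10.10.10.x range described above; external_ips_of and in_lb_range are hypothetical helper names.

```shell
# Print each EXTERNAL-IP listed for one service, one per line, reading the
# default table output of "kubectl get services" on stdin.
# The column can hold several comma-separated addresses, so it is split.
external_ips_of() {
  awk -v svc="$1" '$1 == svc {
    n = split($4, ips, ",")
    for (i = 1; i <= n; i++) print ips[i]
  }'
}

# Succeed only if the address starts with 10.10.10. (the range assumed
# to be served by MetalLB in this example).
in_lb_range() {
  case "$1" in
    10.10.10.*) return 0 ;;
    *)          return 1 ;;
  esac
}

# Example:
#   kubectl get services | external_ips_of hello-kubernetes |
#     while read -r ip; do
#       if in_lb_range "$ip"; then echo "try http://$ip/"; fi
#     done
```

A value such as <pending> in the EXTERNAL-IP column fails the range check, which is a hint that no address has been assigned yet.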

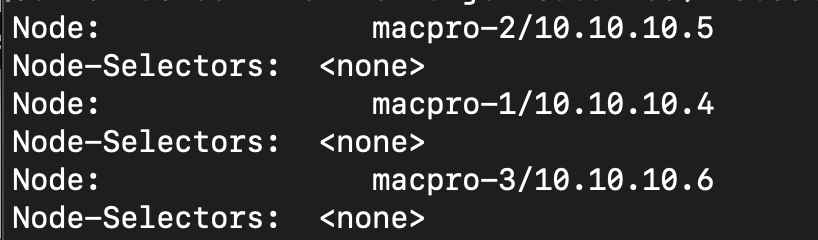

- To ensure the deployment is behaving properly, describe the pods and grep for Node.

kubectl describe pods | grep Node

The following is the expected output for this deployment on a standard PoC cluster. Given 3 replicas in the deployment, one pod should deploy per node.
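The one-pod-per-node expectation can be checked by counting the Node lines instead of reading them by eye. This is a rough sketch: pods_per_node is a hypothetical name, and it assumes each pod in the kubectl describe pods output has a line of the form "Node: name/ip".

```shell
# Count pods per node from "kubectl describe pods" output on stdin.
# Only "Node:" lines are counted. A plain "grep Node" also matches
# "Node-Selectors:" lines, which are ignored here.
pods_per_node() {
  awk '$1 == "Node:" { count[$2]++ }
       END { for (node in count) print count[node], node }' | sort -k2
}

# Example:
#   kubectl describe pods | pods_per_node
```

With 3 replicas spread across 3 nodes, each node should appear once with a count of 1.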

- Use VNC to view the result, and change ExternalIP as desired. Using services and endpoints for port mapping is the most common use of a Kubernetes deployment.

Using Helm

A lead node is usually assigned as your point of contact for kubectl and Helm, generally 10.10.10.4.

Helm should be operated with a local Tiller running, using Role-Based Access Control. Orka users cannot create Kubernetes Roles or RoleBindings at this time, so a ticket must be opened to initiate this. (Tickets can be opened in your MacStadium account.)

Updated almost 2 years ago