Orka on AWS: Getting Started

Orka is a virtualization layer for Mac build infrastructures based on Kubernetes and OCI technology. Orka lets you orchestrate macOS in a cloud environment using Kubernetes on genuine Apple hardware.

With Orka (also referred to as Orka Cluster) on AWS, you can now effortlessly integrate macOS development and macOS CI/CD into your AWS workflows and environments.

How does Orka on AWS work?

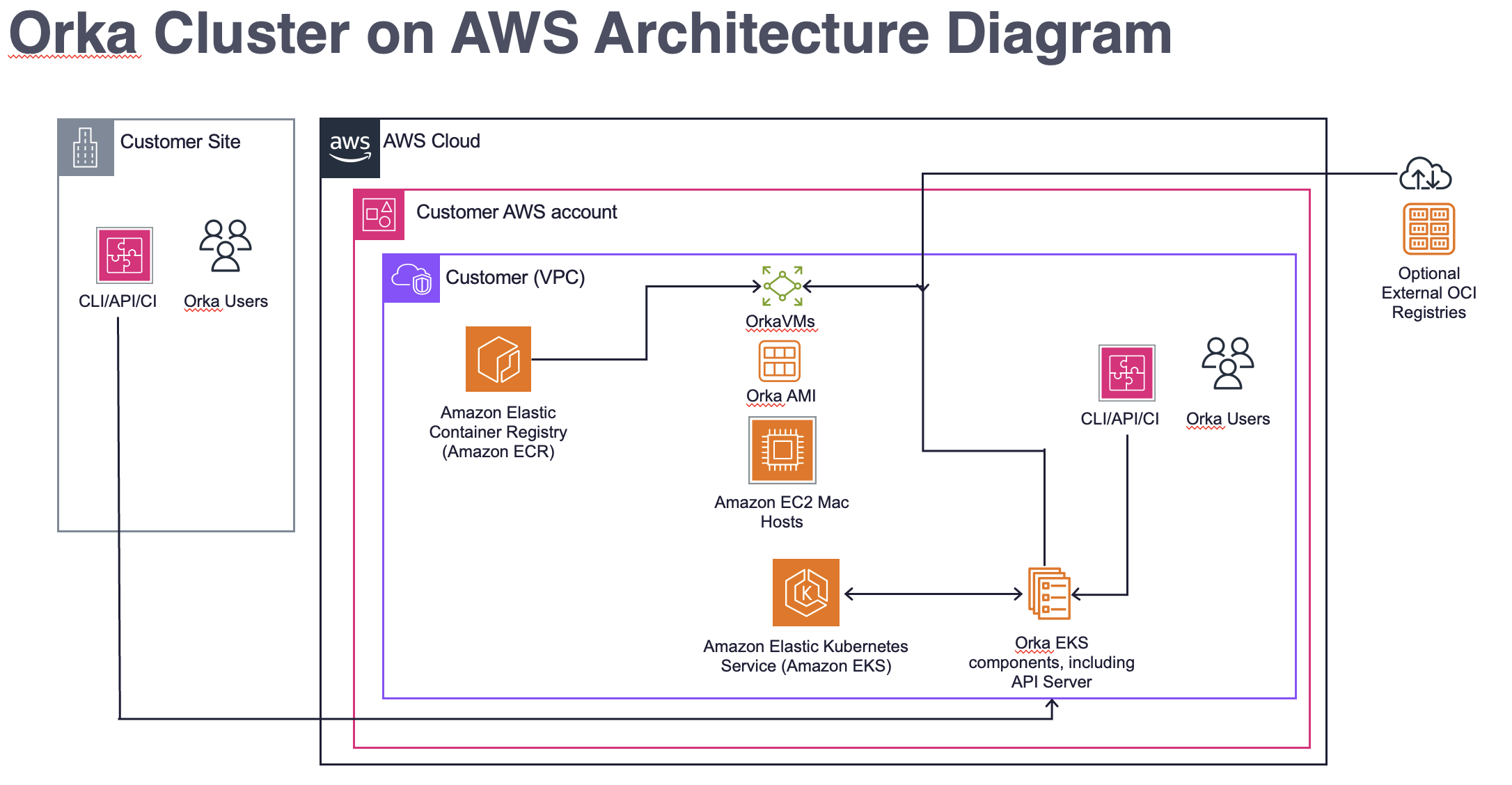

The diagram above illustrates the architecture of an Orka Cluster on AWS, detailing how it integrates with Amazon EC2 Mac instances, Amazon EKS (Elastic Kubernetes Service), and Amazon ECR (Elastic Container Registry) within a customer's AWS account.

The EC2 Mac hosts are set up with Orka AMIs, which provide a stable runtime for virtual machines. Each VM is deployed on the host from an OCI image, which can be fetched from Amazon ECR or an external OCI registry. OCI images enable rapid deployment (within a few minutes) of different macOS versions, pre-configured with various tools and optionally with SIP (System Integrity Protection) disabled. This addresses challenges that typically arise when running workloads directly on EC2 Mac without Orka VMs. The EKS cluster integrates with CI tools, the CLI, or the API, and orchestrates workloads, including spinning VMs up and tearing them down, and caching images on a schedule as needed.

Key elements in the architecture include:

- The customer's AWS account, which houses all of the customer's AWS Cloud components. Orka Cluster is software installed into several AWS Cloud components, such as EKS, EC2 Mac, and ECR.

- The customer's VPC containing Amazon EC2 Mac instances, which run the Orka AMI as the base environment for Orka VMs. Orka VMs are launched dynamically from OCI images stored in ECR or an external OCI registry.

- An Amazon EKS cluster that runs the Orka EKS components for orchestration and automation.

- Amazon ECR, which serves as the container registry within AWS, or an external OCI registry such as GitHub Container Registry or a Docker registry.

- Orka Users interacting with the system via CLI, API, or CI tools.

Considerations for Deployment in AWS

- EKS Deployment

- Orka must be deployed in a dedicated EKS cluster running v1.30. Orka limits certain cluster operations (such as which namespaces can be created and which pods can be deployed), and user management is restrictive. We will use a set of Ansible scripts (a common deployment method we follow, independent of the deployment location). For AWS, we will provide an image with the required playbooks and roles. It is designed to be easy to parameterize, customize, and troubleshoot if something goes wrong.

- Storage

- We recommend using ECR or alternative OCI repositories for image storage.

- Mac EC2

- We will provide an AMI based on an official AWS macOS base image that includes our tooling (Virtual Kubelet, Orka Engine) and a bootstrap script that accepts the EKS parameters to connect as an Orka worker node.

- Networking

- Apple Silicon nodes don't have a direct tie-in to the traditional Kubernetes networking stack. With Orka, we provide a private network, expose certain ports, and require NAT for access. We provide modes for network isolation and internet isolation, and we document below how to expose Orka services outside of the cluster. A future release will include support for a bridge networking mode, which should allow VMs to get an IP address on a subnet in your VPC.

- User Management and Authorization

- Users need to register at portal.macstadium.com, as all user management is handled through the portal service. A future release will allow customers to use their own OIDC provider or authentication mechanism.

- Logging, Monitoring, and Alerting

- We provide documentation below on how to centralize logging into CloudWatch, consume metrics, and configure alerts via OpenTelemetry or CloudWatch.

- Backups

- We will provide an S3-compatible backup target and best practices on what to back up.

- API Server SSL

- We will provide instructions for putting an AWS load balancer in front of the Orka API and configuring SSL Certificates.

Networking and VPC Considerations

We recommend deploying the Orka services to a distinct VPC and implementing your networking via VPC peering. This provides the networking needed to interact with both the VMs and the control plane. Within the VPC, expose the following ports for each service:

- EKS running v1.30

- Orka Operator: TCP 8080 (metrics), TCP 8081 (health check), and TCP 443 (webhook). Linux worker nodes should be reachable from within the cluster on any port. The Operator does not require Internet access.

- Orka OIDC Provider: TCP 443. Requires connectivity to the authentication provider.

- EC2 Mac Nodes

- Virtual Kubelet / Orka Engine AMI: Ingress ports can be internal to the cluster network, and the customer should allow ingress on all ports within that network. Open the following ports to all networks that need access to the VMs: TCP 5900-5912 (Screenshare), TCP 5999-6011 (VNC), and TCP 8822-8834 (SSH). In general, we recommend allowing all outbound traffic for forward compatibility.
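The port list above can be expressed as security group rules. The following is a minimal sketch using the AWS CLI, assuming a single security group (the hypothetical SG_ID) is attached to the EC2 Mac nodes and that the hypothetical ACCESS_CIDR covers the networks that need VM access. By default it only prints the commands; set DRY_RUN=0 to execute them.

```bash
#!/usr/bin/env bash
# Sketch: open the Orka VM access ports on the EC2 Mac nodes' security group.
# SG_ID and ACCESS_CIDR are hypothetical placeholders; set them for your VPC.
# By default the AWS CLI calls are only printed; set DRY_RUN=0 to run them.
set -euo pipefail

SG_ID="${SG_ID:-sg-0123456789abcdef0}"
ACCESS_CIDR="${ACCESS_CIDR:-10.0.0.0/16}"

# Screenshare, VNC, and SSH port ranges from the list above.
VM_PORT_RANGES=("5900-5912" "5999-6011" "8822-8834")

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Allow all traffic between members of the same security group
# ("allow ingress to all ports within the network").
allow_intra_group_traffic() {
  run aws ec2 authorize-security-group-ingress \
    --group-id "$SG_ID" --protocol all --source-group "$SG_ID"
}

# Open each VM port range to the networks that need VM access.
open_vm_ports() {
  local range
  for range in "${VM_PORT_RANGES[@]}"; do
    run aws ec2 authorize-security-group-ingress \
      --group-id "$SG_ID" --protocol tcp --port "$range" --cidr "$ACCESS_CIDR"
  done
}

allow_intra_group_traffic
open_vm_ports
```

Outbound traffic needs no extra rule here, since new security groups allow all egress by default.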

Install Overview

- Talk to your MacStadium Account Team about your Orka on AWS install. MacStadium will create an internal service ID for you, and will provide you with the details you need to connect to our Public ECR repo.

- Follow the installation steps below for the EKS Cluster and the CodeBuild role.

- Take note of the EKS Node IAM role ARN and the CodeBuild role ARN, and share both ARNs with your MacStadium Account Team.

- MacStadium provides customers with an OIDC Client ID to use during CodeBuild execution and with AMI details so they can install Orka software onto EC2 Mac.

- Follow the steps below to update your build spec with the OIDC Client ID, set up IAM roles so that CodeBuild can manage the EKS cluster, and execute CodeBuild to install Orka Services into the EKS cluster.

- Follow the steps below to set up the OIDC provider.

- Follow the steps below to expose the Orka API service via a load balancer.

- Follow the steps below to configure EC2 Macs to interface with the EKS cluster and install the Orka software via the AMI.

EKS Install Steps

- Orka must be deployed in a dedicated EKS cluster running v1.30.

- Orka limits certain cluster operations (such as what namespaces can be created and what pods can be deployed), and user management is restrictive.

- To set up the cluster, follow the AWS guidelines for EKS Auto Mode or the EKS QuickStart.

- Recommendations:

- Choose the same region for the cluster as the one used for deploying the EC2 Mac nodes to avoid costly cross-region traffic.

- Deploy the cluster in private subnets only, as none of the Orka services need to be accessed from the Internet directly.

- Deploy at least two Linux worker nodes for resiliency and high availability.

- Note down the ARN of the EKS Node IAM role. This ARN is required by MacStadium to access the Orka service images.

- Optional:

- Set Cluster endpoint access to “Private” to restrict access to your cluster API from the Internet.

- This setting depends on your access needs. All Orka clients (CLI, integrations, etc.) must have connectivity to the cluster.

- Set the cluster authentication mode to EKS API.

- This is the newest authentication mode for EKS, replacing the old aws-auth config map.

- Orka Cluster installs itself into EKS using Ansible scripts. This may change in the future, but for the time being, you will need to supply the MacStadium Support team with the ARN of a role that has permission to pull an image containing the Ansible playbooks and roles required to install Orka Cluster into EKS.

- Once you provide the ARN, MacStadium Support will grant access to the image and provide you with its ECR repository URI and an OIDC Client ID.

- Additional considerations

- The Ansible runner must use the credentials of the role provided to MacStadium to pull the Ansible image.

- The Ansible runner must have connectivity to the cluster API.

- The Ansible runner must have Cluster Admin privileges to set up the cluster.

- MacStadium recommends using CodeBuild to run Ansible and configure the EKS cluster. CodeBuild provides direct visibility to the cluster, alleviating networking concerns.
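The role ARN requested above, and the cluster API endpoint needed later for the BuildSpec, can be read back with the AWS CLI. This is a sketch only: the cluster and role names are hypothetical, and it assumes that the {k8s_api_address} value expected by the playbook is the endpoint returned by describe-cluster. Commands are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch: look up the EKS node IAM role ARN (to share with MacStadium) and the
# cluster API endpoint (assumed to be the {k8s_api_address} value).
# CLUSTER_NAME, REGION, and NODE_ROLE_NAME are hypothetical placeholders.
set -euo pipefail

CLUSTER_NAME="${CLUSTER_NAME:-orka-eks}"
REGION="${REGION:-us-east-1}"
NODE_ROLE_NAME="${NODE_ROLE_NAME:-orka-eks-node-role}"

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Print the ARN of an IAM role, given its name.
role_arn() {
  run aws iam get-role --role-name "$1" --query 'Role.Arn' --output text
}

# Print the Kubernetes API server endpoint of the EKS cluster.
cluster_endpoint() {
  run aws eks describe-cluster --name "$CLUSTER_NAME" --region "$REGION" \
    --query 'cluster.endpoint' --output text
}

role_arn "$NODE_ROLE_NAME"
cluster_endpoint
```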

Set up a CodeBuild project to run the Orka installation in the EKS cluster

MacStadium recommends using CodeBuild to run Ansible and configure the EKS cluster. CodeBuild provides direct visibility to the cluster, alleviating networking concerns. You will need to supply MacStadium with the ARN for the role that has permission to pull the image. To set up a CodeBuild project as an Ansible runner:

- Allow AWS to create the CodeBuild role for you. Note down the name and ARN of the role, as you will need to share it with MacStadium and modify it later.

- Select the following options:

- Project type - Default project

- Source - no source

- Environment

- Provisioning model - on-demand

- Environment image - custom type

- Compute - EC2

- Environment type - Linux Container

- Image registry - Amazon ECR

- ECR account - other ECR account

- Under Amazon ECR repository URI, enter the image provided by MacStadium.

- (Optional) Set the VPC, subnets, and security group for CodeBuild to use. This is needed only if the EKS cluster endpoint access is set to Private. To do that:

- Click Additional Configuration

- Select the VPC where your cluster is deployed

- Select the subnets which EKS uses

- Select a security group that has access to the EKS API

- In the BuildSpec, add the following commands:

aws eks update-kubeconfig --name {cluster_name} --region {region}
ansible-playbook /ansible/site.yml -e "k8s_api_address={k8s_api_address}" -e "kube_oidc_client_id={kube_oidc_client_id}"

Where:

{cluster_name} - the name of your EKS cluster

{region} - the region where the cluster is deployed

{k8s_api_address} - the K8s API address of your cluster

{kube_oidc_client_id} - the OIDC client ID provided by MacStadium

- Save the configuration.
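For reference, the two commands above fit into a complete CodeBuild buildspec (format version 0.2) under the build phase. The helper below is a sketch that renders such a file from the four placeholder values; the example arguments passed to it are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: render a buildspec (version 0.2) that runs the two commands above.
# The example argument values at the bottom are hypothetical placeholders.
set -euo pipefail

# Usage: render_buildspec <cluster_name> <region> <k8s_api_address> <kube_oidc_client_id>
render_buildspec() {
  local cluster_name="$1" region="$2" api_address="$3" oidc_client_id="$4"
  cat <<EOF
version: 0.2
phases:
  build:
    commands:
      - aws eks update-kubeconfig --name ${cluster_name} --region ${region}
      - ansible-playbook /ansible/site.yml -e "k8s_api_address=${api_address}" -e "kube_oidc_client_id=${oidc_client_id}"
EOF
}

render_buildspec "orka-eks" "us-east-1" \
  "https://EXAMPLE1234.gr7.us-east-1.eks.amazonaws.com" "example-oidc-client-id"
```

The output can be pasted into the BuildSpec editor in the console, or saved as buildspec.yml if you keep the project definition in source control.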

- Next, configure CodeBuild to access the Ansible image provided by MacStadium and to manage the EKS cluster:

- Access the Ansible image provided by MacStadium.

- Provide the ARN of the CodeBuild role configured in the previous step to MacStadium to allow access.

- Manage the EKS cluster. To enable CodeBuild to manage the EKS cluster, complete the following steps:

- The IAM role needs a policy to manage EKS. To do this:

Navigate to the IAM role.

Attach a policy (managed or inline) with:

- Action: eks:*

- Resource: The ARN of the EKS cluster to be configured.

- The IAM role must have Cluster Admin permissions. To do this:

Navigate to the cluster and go to the Access tab.

Click Create access entry.

Select the ARN of the CodeBuild role with type Standard.

Click Next.

Select AmazonEKSClusterAdminPolicy with Cluster scope.

Click Add Policy.

Click Next → Create.

- Once all permissions are configured, you can run CodeBuild, which will install the Orka services within the cluster.
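If you prefer the CLI to the console, the permission steps above and the build run map roughly onto the commands below. This is a sketch: the account ID, region, cluster, role, policy, and project names are hypothetical, and CODEBUILD_ROLE_ARN should be copied exactly from the role's IAM page rather than guessed. Commands are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch: CLI equivalent of the CodeBuild permission steps, then start the build.
# All values below are hypothetical placeholders. Copy CODEBUILD_ROLE_ARN from
# the IAM console; console-created CodeBuild roles usually use /service-role/.
set -euo pipefail

ACCOUNT_ID="${ACCOUNT_ID:-111122223333}"
REGION="${REGION:-us-east-1}"
CLUSTER_NAME="${CLUSTER_NAME:-orka-eks}"
CODEBUILD_ROLE_NAME="${CODEBUILD_ROLE_NAME:-codebuild-orka-install-service-role}"
CODEBUILD_ROLE_ARN="${CODEBUILD_ROLE_ARN:-arn:aws:iam::${ACCOUNT_ID}:role/service-role/${CODEBUILD_ROLE_NAME}}"
PROJECT_NAME="${PROJECT_NAME:-orka-install}"
CLUSTER_ARN="arn:aws:eks:${REGION}:${ACCOUNT_ID}:cluster/${CLUSTER_NAME}"
ADMIN_POLICY_ARN="arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Inline policy: Action eks:* on this cluster only.
eks_policy_document() {
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "eks:*",
      "Resource": "${CLUSTER_ARN}"
    }
  ]
}
EOF
}

grant_codebuild_access() {
  # 1. Attach the inline EKS policy to the CodeBuild role.
  run aws iam put-role-policy --role-name "$CODEBUILD_ROLE_NAME" \
    --policy-name orka-eks-manage --policy-document "$(eks_policy_document)"
  # 2. Create a Standard access entry for the role.
  run aws eks create-access-entry --cluster-name "$CLUSTER_NAME" --region "$REGION" \
    --principal-arn "$CODEBUILD_ROLE_ARN" --type STANDARD
  # 3. Associate AmazonEKSClusterAdminPolicy with cluster scope.
  run aws eks associate-access-policy --cluster-name "$CLUSTER_NAME" --region "$REGION" \
    --principal-arn "$CODEBUILD_ROLE_ARN" --policy-arn "$ADMIN_POLICY_ARN" \
    --access-scope type=cluster
}

grant_codebuild_access
run aws codebuild start-build --project-name "$PROJECT_NAME" --region "$REGION"
```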

Please capture your EKS cluster name and EKS cluster VPC region for use in the bootstrap script when configuring EC2 Macs.

OIDC Provider Setup

To use the Orka API/CLI, you need to set up the OIDC provider.

The issuer URL and client ID will be provided by MacStadium.

To set up the provider:

Go to the Cluster Access tab.

Click Associate Identity Provider.

Add the Issuer URL provided by MacStadium.

Add the Client ID provided by MacStadium.

Add cognito:groups for the Groups claim.

Add oidc: for the Groups prefix.
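The same association can be made with the AWS CLI. In this sketch the issuer URL and client ID defaults are placeholders for the values MacStadium provides, and the configuration name orka-oidc is hypothetical (the CLI requires some name). Commands are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch: associate the MacStadium OIDC provider with the EKS cluster.
# ISSUER_URL and CLIENT_ID come from MacStadium; all defaults, including the
# config name "orka-oidc", are hypothetical placeholders.
set -euo pipefail

CLUSTER_NAME="${CLUSTER_NAME:-orka-eks}"
REGION="${REGION:-us-east-1}"
ISSUER_URL="${ISSUER_URL:-https://issuer.example.com}"
CLIENT_ID="${CLIENT_ID:-example-client-id}"
CONFIG_NAME="${CONFIG_NAME:-orka-oidc}"

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Groups claim cognito:groups and groups prefix oidc:, as in the steps above.
associate_oidc() {
  run aws eks associate-identity-provider-config \
    --cluster-name "$CLUSTER_NAME" --region "$REGION" \
    --oidc "identityProviderConfigName=${CONFIG_NAME},issuerUrl=${ISSUER_URL},clientId=${CLIENT_ID},groupsClaim=cognito:groups,groupsPrefix=oidc:"
}

associate_oidc
```

The association takes a few minutes to become active; its status can be checked with aws eks describe-identity-provider-config --cluster-name <cluster> --identity-provider-config type=oidc,name=orka-oidc.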

Exposing the Orka API Service

To use the CLI, you need access to the Orka API service, which is also utilized by some integrations (e.g., Jenkins). Please review the Orka API User Guide for more details.

We recommend using the AWS Load Balancer Controller to expose the service.

EC2 Mac Install Steps

Before deploying an EC2 Mac instance, ensure that an IAM role exists that meets the requirements below. An EKS access policy must be added to grant the role permissions in Kubernetes. Additionally, a security group that allows access to the EKS control plane from the Mac instance is required.

IAM Policies

The instance must have an IAM role with the AmazonEKSWorkerNodePolicy attached. This is required for the node to authenticate to the EKS cluster.

We also recommend the AmazonSSMManagedInstanceCore policy so the instance can be accessed by SSM for troubleshooting.

EKS Access Policy

The node IAM role must have EKS Cluster Admin permissions. This is necessary as the Mac node manages nodes and pods inside the cluster. To do this:

Navigate to the Cluster and go to the Access tab.

Click Create access entry.

Select the ARN of the Mac node IAM role with type Standard.

Click Next.

Select AmazonEKSClusterAdminPolicy with Cluster scope.

Click Add Policy.

Click Next → Create.
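The IAM policy and access entry requirements above, together with the instance profile needed at launch, can be scripted as follows. This is a sketch with hypothetical role, profile, cluster, and account values; commands are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch: create the Mac node IAM role, attach the two managed policies, wrap
# the role in an instance profile, and grant it cluster admin via an access entry.
# All names and the account ID are hypothetical placeholders.
set -euo pipefail

ACCOUNT_ID="${ACCOUNT_ID:-111122223333}"
REGION="${REGION:-us-east-1}"
CLUSTER_NAME="${CLUSTER_NAME:-orka-eks}"
ROLE_NAME="${ROLE_NAME:-orka-mac-node-role}"
PROFILE_NAME="${PROFILE_NAME:-orka-mac-node-profile}"
ROLE_ARN="arn:aws:iam::${ACCOUNT_ID}:role/${ROLE_NAME}"

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Trust policy that lets EC2 instances assume the role.
trust_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

setup_mac_node_role() {
  run aws iam create-role --role-name "$ROLE_NAME" \
    --assume-role-policy-document "$(trust_policy)"
  local policy
  for policy in AmazonEKSWorkerNodePolicy AmazonSSMManagedInstanceCore; do
    run aws iam attach-role-policy --role-name "$ROLE_NAME" \
      --policy-arn "arn:aws:iam::aws:policy/${policy}"
  done
  run aws iam create-instance-profile --instance-profile-name "$PROFILE_NAME"
  run aws iam add-role-to-instance-profile \
    --instance-profile-name "$PROFILE_NAME" --role-name "$ROLE_NAME"
  run aws eks create-access-entry --cluster-name "$CLUSTER_NAME" --region "$REGION" \
    --principal-arn "$ROLE_ARN" --type STANDARD
  run aws eks associate-access-policy --cluster-name "$CLUSTER_NAME" --region "$REGION" \
    --principal-arn "$ROLE_ARN" \
    --policy-arn arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy \
    --access-scope type=cluster
}

setup_mac_node_role
```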

Security Groups

You must attach a security group that allows traffic between the managed Kubernetes control plane and the Mac node. For more information, see Amazon EKS security group requirements and considerations.
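As one illustration, the Mac nodes' access to the EKS API server (HTTPS, TCP 443) can be granted by adding an ingress rule to the cluster security group that EKS creates. This sketch assumes the Mac nodes use their own security group; both group IDs are hypothetical placeholders. Commands are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch: allow the Mac nodes to reach the EKS API server over HTTPS by adding
# an ingress rule to the cluster security group.
# All IDs and names are hypothetical placeholders.
set -euo pipefail

CLUSTER_NAME="${CLUSTER_NAME:-orka-eks}"
REGION="${REGION:-us-east-1}"
MAC_SG_ID="${MAC_SG_ID:-sg-0aaaaaaaaaaaaaaaa}"
# In a real run, take this value from cluster_security_group (below).
CLUSTER_SG_ID="${CLUSTER_SG_ID:-sg-0bbbbbbbbbbbbbbbb}"

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Print the security group EKS created for the cluster.
cluster_security_group() {
  run aws eks describe-cluster --name "$CLUSTER_NAME" --region "$REGION" \
    --query 'cluster.resourcesVpcConfig.clusterSecurityGroupId' --output text
}

# Allow HTTPS (443) from the Mac node security group to the control plane.
allow_mac_to_api() {
  run aws ec2 authorize-security-group-ingress --region "$REGION" \
    --group-id "$CLUSTER_SG_ID" --protocol tcp --port 443 \
    --source-group "$MAC_SG_ID"
}

cluster_security_group
allow_mac_to_api
```

The AWS requirements page also covers control-plane-to-node traffic (for example, the kubelet port, TCP 10250). Whether the Virtual Kubelet on the Mac node needs that direction opened is an assumption to verify against your setup.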

Provisioning Steps

- We will provide an AMI based on an official AWS macOS base image that includes our tooling (Virtual Kubelet, Orka Engine)

- The AMI will additionally include a bootstrap script that should be run via user data. See the section below for more detailed information

- The IAM role must be linked to an instance profile and attached to the instance

- The security group allowing access to the EKS control plane must be attached to the instance

- We recommend at least 500 GB of storage for the host. The size depends on:

- The size of the images you are using

- The number of images you plan to have cached on the nodes

Bootstrap Script

The AMI includes a bootstrap script that can be run via user data and accepts the following parameters to connect as an Orka worker node:

- EKS cluster name, EKS cluster VPC region, Orka License Key (provided by MacStadium)

The customer should pass the following as user data when launching an instance:

#!/bin/bash

/usr/local/bin/bootstrap-orka <eks-cluster-name> <vpc-region> <orka-license-key>

This will attach the node to the EKS cluster as a self-managed node.
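Putting the provisioning steps together, a launch might look like the sketch below: allocate a Mac dedicated host, then start an instance from the Orka AMI with the bootstrap user data, the node instance profile, the security group, and a 500 GiB root volume. Every ID, name, and the instance type are hypothetical placeholders, and the /dev/sda1 root device name is an assumption to check with aws ec2 describe-images. Commands are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch: allocate a Mac dedicated host and launch an Orka node on it.
# All values are hypothetical placeholders. The root device name /dev/sda1 is an
# assumption; verify it with: aws ec2 describe-images --image-ids <ami-id>
set -euo pipefail

REGION="${REGION:-us-east-1}"
AZ="${AZ:-us-east-1a}"
INSTANCE_TYPE="${INSTANCE_TYPE:-mac2.metal}"
AMI_ID="${AMI_ID:-ami-0123456789abcdef0}"
HOST_ID="${HOST_ID:-h-0123456789abcdef0}"   # returned by allocate-hosts
SUBNET_ID="${SUBNET_ID:-subnet-0123456789abcdef0}"
MAC_SG_ID="${MAC_SG_ID:-sg-0aaaaaaaaaaaaaaaa}"
PROFILE_NAME="${PROFILE_NAME:-orka-mac-node-profile}"
CLUSTER_NAME="${CLUSTER_NAME:-orka-eks}"
ORKA_LICENSE_KEY="${ORKA_LICENSE_KEY:-REPLACE_WITH_LICENSE_KEY}"

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# User data: the bootstrap call documented above.
user_data() {
  cat <<EOF
#!/bin/bash
/usr/local/bin/bootstrap-orka "${CLUSTER_NAME}" "${REGION}" "${ORKA_LICENSE_KEY}"
EOF
}

launch_mac_node() {
  local user_data_file
  user_data_file="$(mktemp)"
  user_data > "$user_data_file"
  run aws ec2 allocate-hosts --region "$REGION" --availability-zone "$AZ" \
    --instance-type "$INSTANCE_TYPE" --quantity 1
  run aws ec2 run-instances --region "$REGION" --image-id "$AMI_ID" \
    --instance-type "$INSTANCE_TYPE" --placement "Tenancy=host,HostId=${HOST_ID}" \
    --subnet-id "$SUBNET_ID" --security-group-ids "$MAC_SG_ID" \
    --iam-instance-profile "Name=${PROFILE_NAME}" \
    --block-device-mappings '[{"DeviceName":"/dev/sda1","Ebs":{"VolumeSize":500,"VolumeType":"gp3"}}]' \
    --user-data "file://${user_data_file}"
  rm -f "$user_data_file"
}

launch_mac_node
```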

Using ECR for Image Storage and Management

You will need to have the following for use with ECR:

- ECR Authentication: Credentials are required to push to and pull from private ECR repositories. For public ECR, they are required only for push.

- IAM Policies: The authentication token is scoped with the appropriate permissions to push and/or pull images based on IAM policies.

ECR Authentication

You can get credentials for use with a private ECR registry with the aws CLI as follows:

aws ecr get-login-password --region <region>

For a public ECR registry:

aws ecr-public get-login-password --region us-east-1

You can then configure the credentials for the Orka cluster using the token:

aws ecr get-login-password --region <region> | orka3 regcred add -u AWS --password-stdin https://<aws-account-number>.dkr.ecr.<region>.amazonaws.com

Note

The token is scoped for image push/pull operations based on the IAM policies configured for the calling entity, i.e. whichever user or role runs the aws ecr get-login-password command.

Note

The token is valid for 12 hours and will need to be refreshed regularly. See: https://docs.aws.amazon.com/AmazonECR/latest/userguide/registry_auth.html#registry-auth-token

A future release will improve upon this with a credential helper.
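Until then, one option is to re-register the token on a schedule shorter than 12 hours. The script below is a sketch that wraps the regcred command shown above; the account ID and region are hypothetical, and it assumes that running orka3 regcred add again for the same registry replaces the stored credential, which you should confirm in the Orka CLI reference. The command is printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch: refresh the ECR registry credential stored in Orka.
# ACCOUNT_ID and REGION are hypothetical placeholders. Assumes that re-running
# "orka3 regcred add" for the same registry replaces the old credential.
set -euo pipefail

ACCOUNT_ID="${ACCOUNT_ID:-111122223333}"
REGION="${REGION:-us-east-1}"

# Build the private ECR registry URL used with orka3 regcred.
ecr_registry_url() {
  printf 'https://%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

# Fetch a fresh token and pipe it to orka3 regcred (printed unless DRY_RUN=0).
refresh_regcred() {
  local url
  url="$(ecr_registry_url "$ACCOUNT_ID" "$REGION")"
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf 'aws ecr get-login-password --region %s | orka3 regcred add -u AWS --password-stdin %s\n' "$REGION" "$url"
  else
    aws ecr get-login-password --region "$REGION" \
      | orka3 regcred add -u AWS --password-stdin "$url"
  fi
}

refresh_regcred
```

Scheduled from cron every 8 hours, with a hypothetical install path, the entry might read: 0 */8 * * * DRY_RUN=0 /usr/local/bin/refresh-orka-regcred.sh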

IAM Policies for ECR

The entity that fetches the authentication token needs to have appropriate permissions to push and/or pull images.

Private Registry

For a private registry, the following policies contain the minimum set of permissions:

Public Registry

For a public registry, the following policies contain the minimum set of permissions:

- Pull: AmazonElasticContainerRegistryPublicReadOnly

- Push: AmazonElasticContainerRegistryPublicPowerUser
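For the private registry case, an inline policy scoped to a single repository is one way to keep permissions tight. The helper below is an illustrative sketch using the standard ECR pull and push actions, not MacStadium's policy list; REPO_ARN is a hypothetical placeholder, and Orka's OCI client may need additional actions.

```bash
#!/usr/bin/env bash
# Sketch: render a minimal inline IAM policy for one private ECR repository.
# Illustrative action list only; REPO_ARN is a hypothetical placeholder.
set -euo pipefail

REPO_ARN="${REPO_ARN:-arn:aws:ecr:us-east-1:111122223333:repository/orka-images}"

# Usage: ecr_policy pull|push
ecr_policy() {
  local actions='"ecr:BatchCheckLayerAvailability", "ecr:GetDownloadUrlForLayer", "ecr:BatchGetImage"'
  case "$1" in
    pull) ;;
    push)
      actions+=', "ecr:InitiateLayerUpload", "ecr:UploadLayerPart", "ecr:CompleteLayerUpload", "ecr:PutImage"'
      ;;
    *)
      echo "usage: ecr_policy pull|push" >&2
      return 1
      ;;
  esac
  # GetAuthorizationToken cannot be scoped to a repository, so it uses "*".
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "ecr:GetAuthorizationToken",
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [${actions}],
      "Resource": "${REPO_ARN}"
    }
  ]
}
EOF
}

ecr_policy pull
```

The rendered document can be attached with aws iam put-role-policy --role-name <role> --policy-name <name> --policy-document "$(ecr_policy push)".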

Mac Node Deprovisioning Steps

To deprovision a Mac Node you need to:

- Delete the Mac instance

- (Optional) Release the Mac dedicated host if you no longer need it

- Delete the Kubernetes node by running kubectl delete node <node_name> where <node_name> is the name of the node you want to deprovision
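The deprovisioning steps above can be sketched as a single script. The instance, host, and node identifiers are hypothetical placeholders. Note that EC2 Mac dedicated hosts have a 24-hour minimum allocation period, and a host can only be released once no instances are running on it. Commands are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch of the deprovisioning steps. All IDs and names are hypothetical.
set -euo pipefail

REGION="${REGION:-us-east-1}"
INSTANCE_ID="${INSTANCE_ID:-i-0123456789abcdef0}"
HOST_ID="${HOST_ID:-h-0123456789abcdef0}"
NODE_NAME="${NODE_NAME:-example-mac-node}"
RELEASE_HOST="${RELEASE_HOST:-0}"   # set to 1 to also release the dedicated host

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

deprovision_mac_node() {
  run aws ec2 terminate-instances --region "$REGION" --instance-ids "$INSTANCE_ID"
  # Optional: the host must be empty (and past its 24-hour minimum) to release.
  if [[ "$RELEASE_HOST" == "1" ]]; then
    run aws ec2 wait instance-terminated --region "$REGION" --instance-ids "$INSTANCE_ID"
    run aws ec2 release-hosts --region "$REGION" --host-ids "$HOST_ID"
  fi
  run kubectl delete node "$NODE_NAME"
}

deprovision_mac_node
```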

Logging, Monitoring, and Alerting

OpenTelemetry Standards

Logging and monitoring conform to OpenTelemetry best practices, meaning that metrics can be scraped from the appropriate resources via Prometheus and visualized with Grafana using Prometheus as a data source.

Logs can be exposed on EC2 Mac workers via CloudWatch or by installing a promtail service, allowing them to be aggregated through Loki.

If you prefer to use CloudWatch, you can reference AWS documentation for consuming OpenTelemetry endpoints and the agent installation guide to integrate with your existing logging system.

Key Log Sources

| Log source | Resource | Access | Purpose |
|---|---|---|---|
| Virtual Kubelet Logs | EC2 Mac Node | Via promtail: /usr/local/virtual-kubelet/vk.log | Interactions between EKS and the worker node for managing virtualization |
| Orka VM Logs | EC2 Mac Node | Via promtail: /Users/administrator/.local/state/virtual-kubelet/vm-logs/* | Logs pertaining to the lifecycle of a specific VM |
| Pod Logs | EKS | Kubernetes client, Kubernetes Dashboard, or a Helm chart that exposes logs to a secondary service | All Kubernetes-level behavior |
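If you ship the EC2 Mac log sources from the table to CloudWatch instead of promtail, the CloudWatch agent can tail the same files. This sketch assumes the agent is already installed in its default /opt/aws/amazon-cloudwatch-agent location and that the instance role has the CloudWatchAgentServerPolicy managed policy; the log group names are hypothetical. Actions are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch: configure the CloudWatch agent to collect the Orka log files listed
# in the table. Log group names are hypothetical; the agent is assumed to be
# installed under /opt/aws/amazon-cloudwatch-agent.
set -euo pipefail

CONFIG_PATH="${CONFIG_PATH:-/opt/aws/amazon-cloudwatch-agent/bin/config.json}"
AGENT_CTL="/opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl"

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Agent config: one log stream per instance for each log source.
render_agent_config() {
  cat <<'EOF'
{
  "logs": {
    "logs_collected": {
      "files": {
        "collect_list": [
          {
            "file_path": "/usr/local/virtual-kubelet/vk.log",
            "log_group_name": "orka/virtual-kubelet",
            "log_stream_name": "{instance_id}"
          },
          {
            "file_path": "/Users/administrator/.local/state/virtual-kubelet/vm-logs/*",
            "log_group_name": "orka/vm-logs",
            "log_stream_name": "{instance_id}"
          }
        ]
      }
    }
  }
}
EOF
}

# Write the config (or print it in dry-run mode), then load it and start the agent.
install_agent_config() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    render_agent_config
  else
    render_agent_config | sudo tee "$CONFIG_PATH" > /dev/null
  fi
  run sudo "$AGENT_CTL" -a fetch-config -m ec2 -s -c "file:${CONFIG_PATH}"
}

install_agent_config
```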

Key Metrics

Lists of available metrics are provided for the Orka API Server and the Orka Operator.

Any metrics exposed by default within EC2 Mac instances can be referenced via CloudWatch. CPU utilization and network traffic are examples of metrics that are made available natively through CloudWatch.
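Alerts on these native metrics can be configured as CloudWatch alarms. The sketch below raises an alarm when a Mac node's average CPUUtilization stays above a threshold for three 5-minute periods; the instance ID, SNS topic, and 80% threshold are hypothetical choices. The command is printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
# Sketch: CloudWatch alarm on sustained high CPU for one EC2 Mac node.
# INSTANCE_ID, SNS_TOPIC_ARN, and CPU_THRESHOLD are hypothetical placeholders.
set -euo pipefail

REGION="${REGION:-us-east-1}"
INSTANCE_ID="${INSTANCE_ID:-i-0123456789abcdef0}"
SNS_TOPIC_ARN="${SNS_TOPIC_ARN:-arn:aws:sns:us-east-1:111122223333:orka-alerts}"
CPU_THRESHOLD="${CPU_THRESHOLD:-80}"

# Print the command instead of executing it unless DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Alarm when average CPU exceeds the threshold for three 300-second periods.
create_cpu_alarm() {
  run aws cloudwatch put-metric-alarm --region "$REGION" \
    --alarm-name "orka-mac-${INSTANCE_ID}-high-cpu" \
    --namespace AWS/EC2 --metric-name CPUUtilization \
    --dimensions "Name=InstanceId,Value=${INSTANCE_ID}" \
    --statistic Average --period 300 --evaluation-periods 3 \
    --threshold "$CPU_THRESHOLD" --comparison-operator GreaterThanThreshold \
    --alarm-actions "$SNS_TOPIC_ARN"
}

create_cpu_alarm
```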

FAQ

Does Orka Cluster support Intel nodes on AWS?

Unfortunately, Orka Cluster does not support Intel Nodes on AWS. While the 3.x version of Orka Cluster generally supports Intel, the support requires a wipe of the machine and an install of our custom-built Linux-based OS. This operation is not supported on AWS EC2 Mac.

How is macOS 15 Sequoia supported on AWS?

macOS 15 Sequoia guest OSes (VMs) will not work out of the box on AWS EC2 Mac. Sequoia guest OS images require the new Apple ID guest functionality, which in turn requires the host user that starts the VM to have a login keychain, even if they do not intend to use the Apple ID guest functionality. This is discussed in the Apple Virtualization documentation: https://developer.apple.com/documentation/virtualization/using-icloud-with-macos-virtual-machines. Unfortunately, Marketplace security requirements do not allow setting up any credentials on the host OS. As a result, there are two options for macOS 15 support:

- After setting up your EC2 Mac, set up a login keychain on the host OS before running the Sequoia Orka VM image.

- MacStadium will supply a Sequoia OCI image that is upgraded from a Sonoma image rather than created from a Sequoia IPSW on a Sequoia host. This image runs without the Apple ID functionality in the guest.
