Shared VM Storage

How to access shared storage from an Orka VM.

Apple Silicon-based Monterey VMs

Starting with Orka 2.4.0, shared VM storage is deprecated for Apple Silicon-based VMs running macOS Monterey. Intel-based Monterey VMs are not affected.

In Orka 3.0, shared VM storage is removed for all Apple Silicon-based Monterey VMs. Intel-based Monterey VMs are not affected. To continue using shared VM storage with Orka 3.0 and later, upgrade your Apple Silicon-based Monterey VMs to macOS Ventura or switch to Intel-based Monterey VMs.


Overview

Starting with Orka 1.6.0, all deployed Intel-based VMs will have access to a shared storage volume in the cluster. Starting with Orka 2.1.0, all deployed ARM-based VMs will have access to the same shared storage.

This storage can, for example, maintain build artifacts between stages of your CI/CD pipeline or host Xcode installers and other build dependencies.

Orka offers two different ways to utilize shared VM storage:

- By default, the VM-shared storage directory is placed on your cluster's primary NFS storage export. VM-shared storage therefore shares space with VM images and ISOs, so account for this when deciding how much data to keep there.

- Optionally, you may request to provision a secondary storage export dedicated to shared storage. This is ideal if you share vast amounts of data between your CI/CD pipeline builds.

Shared Storage in ARM-based VMs

In ARM-based VMs the shared storage will be automatically mounted and available to use. The same storage is shared between ARM-based and Intel-based VMs.

IMPORTANT

To use the shared VM storage with VMs deployed on ARM nodes, make sure to pull the new sonoma-90gb-orka3-arm image from the remote. It contains Orka VM Tools, which are required for the shared VM storage to be automounted in the VM.

IMPORTANT

Orka VM Tools 2.2.0 introduces a breaking change to the Shared VM Storage feature when used with Orka versions 2.1.0 and 2.1.1. As a workaround, make sure to use XXXX-2.1.orkasi images (e.g., 90GBMontereySSH-2.1.orkasi) or upgrade your cluster to Orka 2.2.0.

Shared Storage in Intel-based VMs

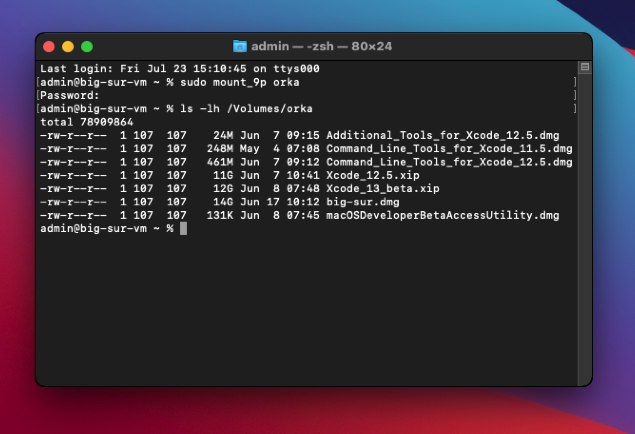

To mount the shared storage volume, run the following command from within the VM:

sudo mount_9p orka

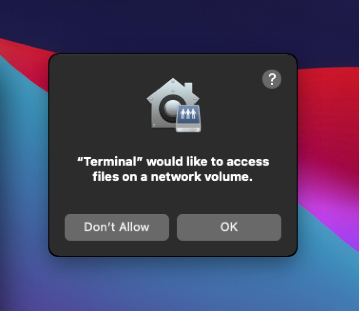

The volume will be mounted at /Volumes/orka. The first time you attempt to access the filesystem via Terminal, you will be asked to grant the Terminal application permissions to access files on a network volume:

Click the OK button to allow access. You will then be able to access files on the volume:
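For scripted setups, the manual mount step can be made safe to re-run. The following is a minimal sketch, assuming the /Volumes/orka mount point described in this section. The names is_mounted and ensure_orka_mounted are hypothetical helpers for illustration and are not part of Orka.

```shell
#!/bin/bash
# Sketch: mount the shared volume only when it is not already mounted.
# MOUNT_POINT is the path used in this guide; both helpers are hypothetical.
MOUNT_POINT="/Volumes/orka"

# Succeeds when the given path is listed as a mount point by `mount`.
is_mounted() {
  mount | grep -qF " on $1 "
}

# Mounts the shared storage if needed; does nothing when it is already there.
ensure_orka_mounted() {
  if is_mounted "$MOUNT_POINT"; then
    echo "$MOUNT_POINT is already mounted"
  else
    sudo mount_9p orka
  fi
}
```

Calling ensure_orka_mounted at the start of a build script avoids a second mount attempt when the volume is already available.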

Automount Shared Storage in Intel-based VMs

Instead of mounting the shared storage manually after every OS restart, you can create a /Library/LaunchDaemons/com.mount9p.plist to handle automounting the shared storage.

- Connect to your VM via SSH.

ssh <macOS_user>@<VM_IP> -p <SSH_PORT>

- Make sure that /Volumes/orka is already mounted on the VM.

ls /Volumes

- If not already mounted, mount the shared VM storage.

sudo mount_9p orka

- Navigate to /Library/LaunchDaemons and create a com.mount9p.plist file.

cd /Library/LaunchDaemons

ls

sudo vim com.mount9p.plist

- Copy the following contents and paste them in Vim. Type :wq to save and exit.

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<key>Label</key>

<string>com.mount9p.plist</string>

<key>RunAtLoad</key>

<true/>

<key>StandardErrorPath</key>

<string>/var/log/mount_9p_error.log</string>

<key>StandardOutPath</key>

<string>/var/log/mount_9p.log</string>

<key>ProgramArguments</key>

<array>

<string>/bin/bash</string>

<string>-c</string>

<string>mkdir -p /Volumes/orka &amp;&amp; mount_9p orka</string>

</array>

</dict>

</plist>

- Change the ownership and permissions for com.mount9p.plist.

sudo chown root:wheel /Library/LaunchDaemons/com.mount9p.plist

sudo chmod 600 /Library/LaunchDaemons/com.mount9p.plist

- Reboot the VM and save or commit the VM image.
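As an alternative to pasting the file in Vim, the plist can be written from a script. This is a sketch under stated assumptions: write_mount9p_plist is a hypothetical helper, and its argument is the directory to write to. The shell operator && is written as &amp;amp;&amp;amp; inside the file because XML requires ampersands to be escaped.

```shell
#!/bin/bash
# Sketch: write the LaunchDaemon plist without an interactive editor.
# write_mount9p_plist is a hypothetical helper; $1 is the target directory.
write_mount9p_plist() {
  local dest_dir="$1"
  # The quoted heredoc delimiter keeps the content literal (no expansion).
  cat > "$dest_dir/com.mount9p.plist" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
  <key>Label</key>
  <string>com.mount9p.plist</string>
  <key>RunAtLoad</key>
  <true/>
  <key>StandardErrorPath</key>
  <string>/var/log/mount_9p_error.log</string>
  <key>StandardOutPath</key>
  <string>/var/log/mount_9p.log</string>
  <key>ProgramArguments</key>
  <array>
    <string>/bin/bash</string>
    <string>-c</string>
    <string>mkdir -p /Volumes/orka &amp;&amp; mount_9p orka</string>
  </array>
</dict>
</plist>
EOF
  # Same permissions as in the manual steps.
  chmod 600 "$dest_dir/com.mount9p.plist"
}
```

To use it, end the script with a call such as write_mount9p_plist /Library/LaunchDaemons and run the script with sudo, then apply the chown command from the steps above. On macOS, plutil -lint /Library/LaunchDaemons/com.mount9p.plist reports whether the file parses as a valid property list.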

Limitations

Reading and Writing Data

You may encounter permissions issues when reading or writing data to the shared storage volume. To work around this, you may need to become the root user to write data to the volume.

If you need to give a specific user read and write access to files, you can add that user to a group with GID 107. For example, if your CI user is called machine-user, create a group named ci with GID 107 and add the user to it:

sudo dscl . create /Groups/ci

sudo dscl . create /Groups/ci gid 107

sudo dscl . create /Groups/ci passwd '*'

sudo dscl . create /Groups/ci GroupMembership machine-user

Confirm the above changes were made with the command dscl . read /Groups/ci. Reboot the virtual machine and save or commit the VM image to persist these changes.
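In addition to reading the group record, membership can be checked from the user's side. This is a minimal sketch; user_in_gid is a hypothetical helper, and machine-user and GID 107 are the example values from this section.

```shell
#!/bin/bash
# Sketch: check whether a user belongs to a group with a given numeric GID.
# user_in_gid is a hypothetical helper; $1 is the user name, $2 is the GID.
user_in_gid() {
  # `id -G` prints all numeric group IDs of the user, separated by spaces.
  id -G "$1" | tr ' ' '\n' | grep -qx "$2"
}
```

For example, user_in_gid machine-user 107 && echo "machine-user is in group 107" prints the message only when the membership is in place.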

IMPORTANT

Files must be given group write access to be modified by the user you have added to the 107 group. For example, sudo chmod g+w myfile.txt.
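When many files need group write access, the chmod step can be applied to a whole directory tree. The sketch below assumes that permission changes are honored on the shared volume and that the caller has the rights to make them (you may need to run it as root). grant_group_write is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch: add group write access to every regular file under a directory
# that does not already have it.
# grant_group_write is a hypothetical helper; $1 is the directory to process.
grant_group_write() {
  # -perm -020 matches files with the group write bit set; `!` negates it.
  find "$1" -type f ! -perm -020 -exec chmod g+w {} +
}
```

A call such as grant_group_write /Volumes/orka/artifacts would process a directory on the shared volume. The artifacts path is only an example and does not exist by default.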

Cluster Storage Limits

If you are using the default primary storage export in your cluster for shared VM storage, keep in mind that this storage also hosts your Orka VM images and other data. This is acceptable for sharing a limited set of files between virtual machines but is not recommended for intensive I/O. If you need frequent reads and writes to the shared storage volume, setting up dedicated secondary storage is highly recommended.
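Because the default export is shared with VM images, it can help to check how full the volume is before writing large artifacts. This is a sketch, assuming that df reports meaningful usage figures for the mounted volume. usage_percent and check_usage are hypothetical helpers, and any threshold you pass is your own choice, not an Orka setting.

```shell
#!/bin/bash
# Sketch: report how full a mounted volume is and warn above a threshold.
# Both helpers are hypothetical and not part of Orka.

# Prints the used capacity of the filesystem holding $1 as a bare number.
usage_percent() {
  # `df -P` uses the portable layout; column 5 of row 2 is the capacity.
  df -P "$1" | awk 'NR==2 { gsub("%", "", $5); print $5 }'
}

# Returns non-zero when usage of $1 is at or above the threshold $2.
check_usage() {
  local pct
  pct="$(usage_percent "$1")"
  if [ "$pct" -ge "$2" ]; then
    echo "WARNING: $1 is ${pct}% full"
    return 1
  fi
  echo "$1 is ${pct}% full"
}
```

For example, check_usage /Volumes/orka 80 prints a warning and returns a non-zero status once the volume is at least 80% full, which a CI step can use to stop before copying more data.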

Known Issues

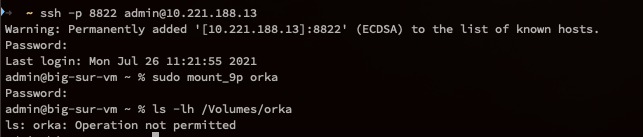

When connecting to the VM over SSH and attempting to access the shared storage volume, you may encounter the error orka: Operation not permitted:

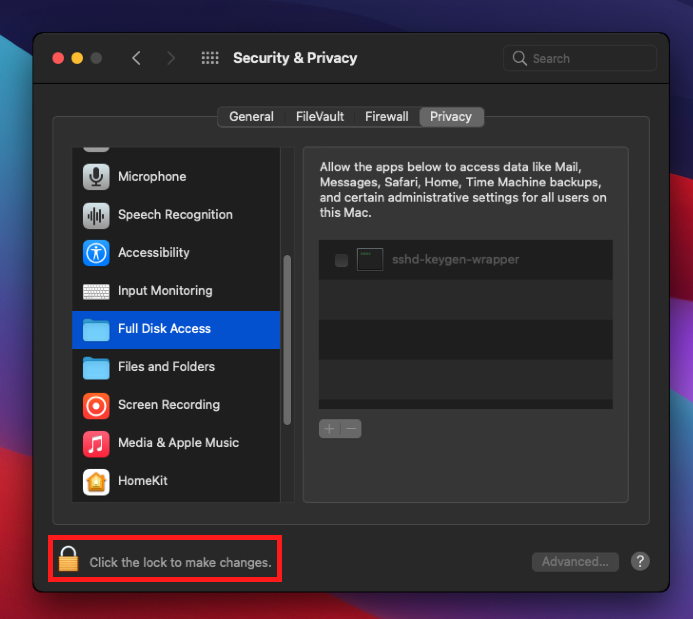

To fix this issue, connect to the VM via VNC and navigate to System Preferences → Security & Privacy and click on the Privacy tab. From the list select Full Disk Access and click the padlock in the lower lefthand corner to make changes:

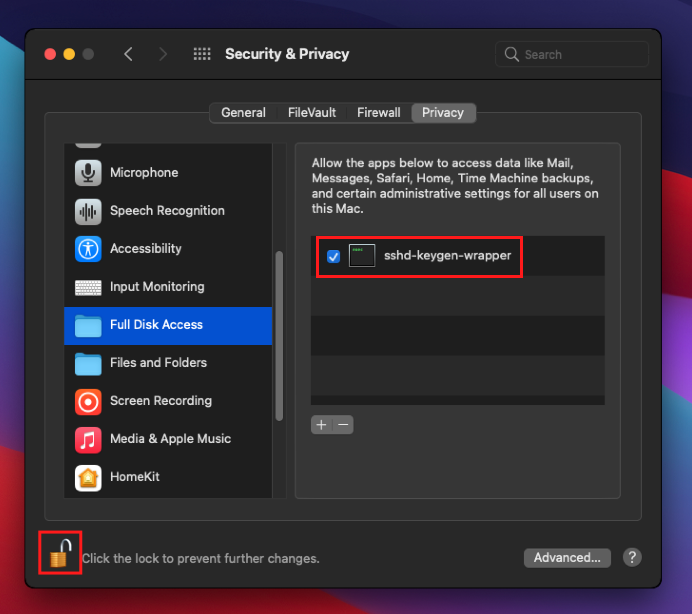

You will then be prompted to enter your password. Next, click the checkbox next to sshd-keygen-wrapper:

Click the padlock again to prevent further changes. You should now be able to access the shared storage volume over an SSH connection.

IMPORTANT

Make sure to save or commit the VM image after completing the above steps to persist changes.
