Kubernetes Reference

Brief Kubernetes overview and workflows to help you get started with Kubernetes and Orka.

Kubernetes Design and Architecture

Overview

Kubernetes is a production-grade, open-source infrastructure for the deployment, scaling, management, and composition of application containers across clusters of hosts, inspired by previous work at Google. Kubernetes is more than just a “container orchestrator.” It aims to eliminate the burden of orchestrating physical/virtual compute, network, and storage infrastructure, and enable application operators and developers to focus entirely on container-centric primitives for self-service operation. Kubernetes also provides a stable, portable foundation (a platform) for building customized workflows and higher-level automation.

Kubernetes is primarily targeted at applications composed of multiple containers. It therefore groups containers using pods and labels into tightly coupled and loosely coupled formations for easy management and discovery.
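As a rough illustration of how labels support grouping and discovery, the sketch below matches pods against an equality-based label selector. The pod names, label keys, and the `matches` and `select` helpers are hypothetical and are not part of any Kubernetes client library.

```python
# Hypothetical in-memory pods; names and labels are illustrative only.
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "db", "tier": "backend"}},
]


def matches(labels, selector):
    """Return True if every key/value pair in the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(pods, selector):
    """Return the names of pods whose labels satisfy the selector."""
    return [pod["name"] for pod in pods if matches(pod["labels"], selector)]


# Loosely coupled grouping: everything in the frontend tier.
print(select(pods, {"tier": "frontend"}))
```

Because the selector is evaluated against whatever labels the pods currently carry, the group's membership changes as pods are added, removed, or relabeled, without any explicit registration step.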

Kubernetes is a platform for deploying and managing containers. Kubernetes provides a container runtime, container orchestration, container-centric infrastructure orchestration, self-healing mechanisms such as health checking and re-scheduling, and service discovery and load balancing.

Kubernetes aspires to be an extensible, pluggable, building-block OSS platform and toolkit. Therefore, architecturally, we want Kubernetes to be built as a collection of pluggable components and layers, with the ability to use alternative schedulers, controllers, storage systems, and distribution mechanisms, and we're evolving its current code in that direction. Furthermore, we want others to be able to extend Kubernetes functionality, such as with higher-level PaaS functionality or multi-cluster layers, without modification of core Kubernetes source. Therefore, its API isn't just (or even necessarily mainly) targeted at end users, but at tool and extension developers. Its APIs are intended to serve as the foundation for an open ecosystem of tools, automation systems, and higher-level API layers. Consequently, there are no "internal" inter-component APIs. All APIs are visible and available, including the APIs used by the scheduler, the node controller, the replication-controller manager, Kubelet's API, etc. There's no glass to break: to handle more complex use cases, one can just access the lower-level APIs in a fully transparent, composable manner.

Goals

The project is committed to the following (aspirational) design ideals:

• Portable. Kubernetes runs everywhere (public cloud, private cloud, bare metal, laptop) with consistent behavior so that applications and tools are portable throughout the ecosystem as well as between development and production environments.

• General-purpose. Kubernetes should run all major categories of workloads so that you can run all of your workloads on a single infrastructure: stateless and stateful, microservices and monoliths, services and batch, greenfield and legacy.

• Meet users partway. Kubernetes doesn’t just cater to purely greenfield cloud-native applications, nor does it meet all users where they are. It focuses on deployment and management of microservices and cloud-native applications but provides some mechanisms to facilitate migration of monolithic and legacy applications.

• Flexible. Kubernetes functionality can be consumed a la carte and (in most cases) Kubernetes does not prevent you from using your own solutions in lieu of built-in functionality.

• Extensible. Kubernetes enables you to integrate it into your environment and to add the additional capabilities you need, by exposing the same interfaces used by built-in functionality.

• Automatable. Kubernetes aims to dramatically reduce the burden of manual operations. It supports both declarative control by specifying users' desired intent via its API, as well as imperative control to support higher-level orchestration and automation. The declarative approach is key to the system’s self-healing and autonomic capabilities.

• Advance the state of the art. While Kubernetes intends to support non-cloud-native applications, it also aspires to advance the cloud-native and DevOps state of the art, such as in the participation of applications in their own management. However, in doing so, we strive not to force applications to lock themselves into Kubernetes APIs, which is, for example, why we prefer configuration over convention in the downward API. Additionally, Kubernetes is not bound by the lowest common denominator of systems upon which it depends, such as container runtimes and cloud providers. An example where we pushed the envelope of what was achievable was in its IP per pod networking model.

Architecture

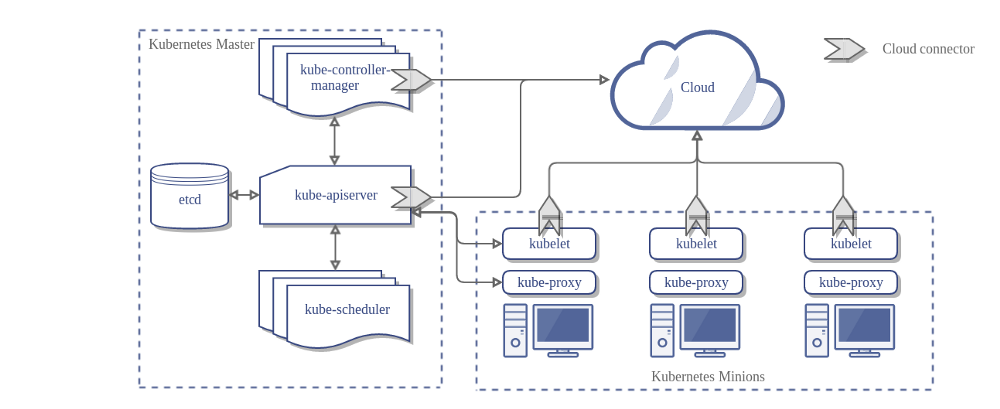

A running Kubernetes cluster contains node agents (kubelet) and a cluster control plane (AKA master), with cluster state backed by a distributed storage system (etcd).

Cluster control plane (AKA master)

The Kubernetes control plane is split into a set of components, which can all run on a single master node or can be replicated in order to support high-availability clusters.

Kubernetes provides a REST API supporting primarily CRUD operations on (mostly) persistent resources, which serve as the hub of its control plane. Kubernetes's API provides IaaS-like container-centric primitives such as pods, Services, and Ingress, and also lifecycle APIs to support orchestration (self-healing, scaling, updates, termination) of common types of workloads, such as ReplicaSet (simple fungible/stateless app manager), Deployment (orchestrates updates of stateless apps), Job (batch), CronJob (cron), DaemonSet (cluster services), and StatefulSet (stateful apps). We deliberately decoupled service naming/discovery and load balancing from application implementation, since the latter is diverse and open-ended.

Both user clients and components containing asynchronous controllers interact with the same API resources, which serve as coordination points, common intermediate representation, and shared state. Most resources contain metadata, including labels and annotations, fully elaborated desired state (spec), including default values, and observed state (status).
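To make the metadata/spec/status split concrete, here is a simplified sketch of a resource written as a Python dictionary. The field layout follows the general shape of a ReplicaSet, but the object is heavily abbreviated, and the name, annotation key, and `is_converged` helper are hypothetical.

```python
import json

# Simplified shape of a resource; real objects carry many more fields.
replica_set = {
    "apiVersion": "apps/v1",
    "kind": "ReplicaSet",
    "metadata": {
        "name": "web",                                   # hypothetical name
        "labels": {"app": "web"},
        "annotations": {"example.com/owner": "team-a"},  # hypothetical annotation
    },
    "spec": {"replicas": 3},                             # desired state
    "status": {"replicas": 2, "readyReplicas": 2},       # observed state
}


def is_converged(resource):
    """True when the observed replica count equals the desired count."""
    return resource["status"]["replicas"] == resource["spec"]["replicas"]


print(json.dumps(replica_set["metadata"], indent=2))
print("converged:", is_converged(replica_set))
```

Users and controllers read and write the same object: a user edits `spec`, while a controller reports what it observes in `status`.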

Controllers work continuously to drive the actual state towards the desired state, while reporting back the currently observed state for users and for other controllers.

While the controllers are level-based to maximize fault tolerance, they typically watch for changes to relevant resources in order to minimize reaction latency and redundant work. This enables decentralized and decoupled choreography-like coordination without a message bus.
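The level-based behavior described above can be sketched as a reconciliation function that looks only at the current desired and observed state. This is a minimal in-memory simulation, not how any real controller is implemented; the `reconcile` function and the pod names are hypothetical.

```python
import itertools


def reconcile(desired, running, new_names):
    """Run one reconciliation pass and return the observed status.

    The decision depends only on the current state (level-based), not on
    the events that led to it, so a missed notification is corrected on
    the next pass.
    """
    while len(running) < desired:
        running.add(next(new_names))   # start a missing replica
    while len(running) > desired:
        running.remove(max(running))   # stop a surplus replica (deterministic choice)
    return {"replicas": len(running)}


new_names = (f"pod-{i}" for i in itertools.count())  # hypothetical pod names
running = set()

status = reconcile(3, running, new_names)  # initial scale-up
running.discard("pod-1")                   # simulate a crashed pod
status = reconcile(3, running, new_names)  # the next pass repairs the gap
print(sorted(running), status)
```

In a real cluster a watch would trigger the second pass promptly, but the result would be the same if the pass ran later on a timer, which is the fault-tolerance property that level-based control provides.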

API Server

The API server serves up the Kubernetes API. It is intended to be a relatively simple server, with most/all business logic implemented in separate components or in plug-ins. It mainly processes REST operations, validates them, and updates the corresponding objects in etcd (and perhaps eventually other stores). Note that, for a number of reasons, Kubernetes deliberately does not support atomic transactions across multiple resources.

Kubernetes cannot function without this basic API machinery, which includes:

• REST semantics, watch, durability and consistency guarantees, API versioning, defaulting, and validation

• Built-in admission-control semantics, synchronous admission-control hooks, and asynchronous resource initialization

• API registration and discovery

Additionally, the API server acts as the gateway to the cluster. By definition, the API server must be accessible by clients from outside the cluster, whereas the nodes, and certainly containers, may not be. Clients authenticate the API server and also use it as a bastion and proxy/tunnel to nodes and pods (and services).

Cluster state store

All persistent cluster state is stored in an instance of etcd. This provides a way to store configuration data reliably. With watch support, coordinating components can be notified very quickly of changes.
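The idea of a watch can be illustrated with a small in-memory key-value store that notifies subscribers when a key under a given prefix changes. This only imitates the concept and is not the etcd API; the `WatchableStore` class and the key layout are hypothetical.

```python
class WatchableStore:
    """Tiny in-memory key-value store with change notifications."""

    def __init__(self):
        self._data = {}
        self._revision = 0   # increases by one on every write
        self._watchers = []  # list of (prefix, callback) pairs

    def watch(self, prefix, callback):
        """Call callback(revision, key, value) for each later write under prefix."""
        self._watchers.append((prefix, callback))

    def put(self, key, value):
        """Store the value, then notify every watcher whose prefix matches."""
        self._revision += 1
        self._data[key] = value
        for prefix, callback in self._watchers:
            if key.startswith(prefix):
                callback(self._revision, key, value)
        return self._revision

    def get(self, key):
        return self._data.get(key)


events = []
store = WatchableStore()
store.watch("/pods/", lambda rev, key, value: events.append((rev, key, value)))

store.put("/pods/web-1", "Pending")
store.put("/nodes/node-a", "Ready")   # outside the watched prefix
store.put("/pods/web-1", "Running")
print(events)
```

A component that watches only the keys it cares about learns of changes as they happen instead of polling the whole store.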

Controller-Manager Server

Most other cluster-level functions are currently performed by a separate process, called the Controller Manager. It performs both lifecycle functions (e.g., namespace creation and lifecycle, event garbage collection, terminated-pod garbage collection, cascading-deletion garbage collection, node garbage collection) and API business logic (e.g., scaling of pods controlled by a ReplicaSet).

Together, these functions make up the application management and composition layer, which provides self-healing, scaling, application lifecycle management, service discovery, routing, and service binding and provisioning.

These functions may eventually be split into separate components to make them more easily extended or replaced.

Scheduler

Kubernetes enables users to ask a cluster to run a set of containers. The scheduler component automatically chooses hosts to run those containers on. The scheduler watches for unscheduled pods and binds them to nodes via the /binding pod subresource API, according to the availability of the requested resources, quality of service requirements, affinity and anti-affinity specifications, and other constraints. Kubernetes supports user-provided schedulers and multiple concurrent cluster schedulers, using the shared-state approach pioneered by Omega. In addition to the disadvantages of pessimistic concurrency described by the Omega paper, two-level scheduling models that hide information from the upper-level schedulers need to implement all of the same features in the lower-level scheduler as required by all upper-layer schedulers in order to ensure that their scheduling requests can be satisfied by available desired resources.
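The filter-then-bind flow can be sketched with a toy scheduler that considers only CPU. It is an assumption-laden simplification: the real scheduler weighs many more constraints, and the `schedule` function, the node and pod names, and the "most free CPU" scoring rule are hypothetical.

```python
def schedule(pods, free_cpu):
    """Bind each unscheduled pod to the feasible node with the most free CPU.

    Returns (bindings, pending): a pod-to-node mapping and the names of
    pods that fit on no node.
    """
    free = dict(free_cpu)  # work on a copy so the caller's data is untouched
    bindings, pending = {}, []
    for pod in pods:
        if pod["node"] is not None:
            continue  # already bound; nothing to do
        # Filter: keep only nodes with enough free CPU for this pod.
        feasible = sorted(name for name, cpu in free.items() if cpu >= pod["cpu"])
        if not feasible:
            pending.append(pod["name"])
            continue
        # Score: prefer the node with the most free CPU (ties go to the first name).
        chosen = max(feasible, key=lambda name: free[name])
        free[chosen] -= pod["cpu"]
        bindings[pod["name"]] = chosen
    return bindings, pending


nodes = {"node-a": 4, "node-b": 2}  # currently free CPU cores per node
pods = [
    {"name": "web", "cpu": 3, "node": None},
    {"name": "db", "cpu": 2, "node": None},
    {"name": "batch", "cpu": 2, "node": None},
    {"name": "cache", "cpu": 1, "node": "node-b"},  # already scheduled
]

bindings, pending = schedule(pods, nodes)
print(bindings, pending)
```

Pods that cannot be placed simply remain pending, so a later pass can bind them once resources free up.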

The Kubernetes Node

The Kubernetes node has the services necessary to run application containers and be managed from the master systems.

Kubelet

The most important and most prominent controller in Kubernetes is the Kubelet, which is the primary implementer of the pod and node APIs that drive the container execution layer. Without these APIs, Kubernetes would just be a CRUD-oriented REST application framework backed by a key-value store (and perhaps the API machinery will eventually be spun out as an independent project).

Kubernetes executes isolated application containers as its default, native mode of execution, as opposed to processes and traditional operating-system packages. Not only are application containers isolated from each other, but they are also isolated from the hosts on which they execute, which is critical to decoupling management of individual applications from each other and from management of the underlying cluster physical/virtual infrastructure.

Kubernetes provides pods that can host multiple containers and storage volumes as its fundamental execution primitive in order to facilitate packaging a single application per container, decoupling deployment-time concerns from build-time concerns, and migration from physical/virtual machines. The pod primitive is key to gleaning the primary benefits of deployment on modern cloud platforms, such as Kubernetes.

API admission control may reject pods or add additional scheduling constraints to them, but Kubelet is the final arbiter of what pods can and cannot run on a given node, not the schedulers or DaemonSets.

Kubelet also currently links in the cAdvisor resource monitoring agent.

Container runtime

Each node runs a container runtime, which is responsible for downloading images and running containers.

Kubelet does not link in the base container runtime. Instead, we're defining a Container Runtime Interface to control the underlying runtime and facilitate pluggability of that layer. This decoupling is needed in order to maintain clear component boundaries, facilitate testing, and facilitate pluggability. Runtimes supported today, either upstream or by forks, include at least Docker (for Linux and Windows), rkt, cri-o, and frakti.

Kube Proxy

The service abstraction provides a way to group pods under a common access policy (e.g., load-balanced). The implementation of this creates a virtual IP which clients can access and which is transparently proxied to the pods in a service. Each node runs a kube-proxy process which programs iptables rules to trap access to service IPs and redirect them to the correct backends. This provides a highly-available load-balancing solution with low performance overhead by balancing client traffic from a node on that same node.
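Conceptually, the redirection amounts to a per-node lookup table from a service's virtual IP to one of its backend pods. The sketch below models that table in plain Python; it does not program iptables, the round-robin choice is a simplification chosen for determinism, and the `ServiceTable` class and all addresses are hypothetical.

```python
import itertools


class ServiceTable:
    """Per-node mapping from a service virtual IP to its backend pod addresses."""

    def __init__(self):
        self._backends = {}

    def set_endpoints(self, virtual_ip, endpoints):
        """Replace the backends for a service; an empty list removes the entry."""
        if endpoints:
            self._backends[virtual_ip] = itertools.cycle(list(endpoints))
        else:
            self._backends.pop(virtual_ip, None)

    def route(self, destination):
        """Rewrite a service destination to a backend; pass other traffic through."""
        backends = self._backends.get(destination)
        return next(backends) if backends is not None else destination


table = ServiceTable()
table.set_endpoints("10.0.0.10:80", ["172.17.0.2:8080", "172.17.0.3:8080"])

for _ in range(3):
    print(table.route("10.0.0.10:80"))   # alternates between the two backends
print(table.route("192.168.1.5:22"))     # not a service IP, left unchanged
```

Because every node holds its own copy of the table, a client's traffic is balanced on the node where it originates rather than passing through a central load balancer.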
