Orka v2.1.1

New features, improved functionality, and bug fixes in Orka v2.1.1

Orka Release Notes

v2.1.1

August 05, 2022

v2.1.0

June 27, 2022

We’re continually working to provide you with new features, tools, and plugins to improve your overall Orka experience. For the latest and greatest, be sure to update your CLI to 2.1 after your environment has been updated to 2.1.1 or 2.1.0. Here’s an overview of the changes that shipped with versions 2.1.1 and 2.1.0.

Orka v2.1.1

Improvements

- Creating a token now returns the same token until it is revoked. Previously, every call to the endpoint created a new token, and the old tokens were not automatically revoked.

- All Orka operations respond faster thanks to the new way tokens are handled.

To All Orka Jenkins Plugin Users

To take full advantage of the token management improvements, make sure to update the Orka Jenkins plugin to the latest version.

Using Orka Jenkins plugin v1.28 or earlier with Orka 2.1.1 or later revokes the user token on every job run. As a result, you need to log in to the Orka CLI or Web UI more often than expected.

Bug Fixes

- Applied several fixes for an issue where VMs were sporadically left in a Pending state indefinitely.

- Orka response times are now consistent and no longer degrade over time.

- VM deployments no longer hang indefinitely if there isn't enough CPU available on the node where the VM is deployed.

- ARM-based VM deployments now fail if the underlying base image is not properly downloaded on the node. Previously, the deployment succeeded but the VM wasn't reachable via SSH.

- It is now possible to SSH to any ARM-based VM after the node it is deployed to has been restarted.

- It is now possible to deploy two ARM-based VMs on the same node without sporadically getting the error "Requested node XXX is not available."

- It is now possible to list all VMs even when a VM is deployed with 0 RAM. Previously, it resulted in an error.

- Deploying an Intel-based VM with 2 replicas now assigns different ports to the replicas.

- Sandboxing a node now works as expected: VMs can no longer be deployed to a sandboxed node.

- Reduced node state flapping between Ready and Not Ready through better memory management on the nodes.

Orka v2.1.0

New features

Orka 2.1 introduces the following new features.

Mixed Hardware Support

With Orka 2.1, it is possible to add different hardware to a cluster. This gives the flexibility to mix different models of Mac minis with Mac Pros in the same cluster.

Setting Memory for a VM

With Orka 2.1, you can specify in a VM config how much memory the VMs deployed from that config should use. This allows for better memory management in your cluster.

Learn more about setting Memory for a VM

Orka Logs 2.0

Orka 2.1 introduces Orka Logs 2.0, which lets you use LogQL to filter and limit logs.

Learn more about Orka Logs 2.0

Shared VM Storage for VMs deployed on ARM nodes

You can now use shared VM storage with ARM-based VMs. The storage is accessible to all VMs in a cluster, both Intel-based and ARM-based.

Learn more about Shared VM Storage

IMPORTANT

To use the shared VM storage with VMs deployed on ARM nodes, make sure to pull the new 90GBMontereySSH.orkasi image from the remote. It contains Orka VM Tools, which are required for the shared VM storage to be automounted in the VM.

IMPORTANT

To use a secondary shared VM storage in your environment, request that it be added in your upgrade ticket.

VM Internet Isolation for VMs deployed on ARM nodes

Similar to the VMs deployed on Intel nodes, you can now block Internet access for VMs deployed on ARM nodes. VM Internet isolation is configured as part of the cluster configuration.

Learn more about VM Internet isolation and how to enable it

VM Network Isolation for VMs deployed on ARM nodes

Similar to the VMs deployed on Intel nodes, you can now restrict network access for VMs deployed on ARM nodes. The feature lets you control access from one VM to another and from VMs to the Orka API.

Learn more about VM Network isolation and how to enable it

Image Copy for ARM-based (.orkasi) images

Similar to the Intel images, it is now possible to copy an ARM-based image and save it with a different name.

Learn more about copying images

Custom Port Mapping for VMs deployed on ARM nodes

Similar to the VMs deployed on Intel nodes, you can now specify custom ports to be opened on VMs deployed on ARM nodes. Opening custom ports lets you access custom services running on the VM.

Learn more about opening additional ports on a VM

Tekton Integration with VMs deployed on ARM nodes

With Orka 2.1, it is possible to use VMs deployed on ARM nodes for your Tekton integrations.

(New clusters only) Orka API endpoint last octet changed to .20

Starting with Orka 2.1, new clusters use an updated IP scheme where the Orka API is accessible on the .20 address of your Private-1 network (usually 10.221.188.20) instead of the well-known .100 address (usually 10.221.188.100). The change allows for better IP management and makes it easier to extend the cluster with additional worker nodes.

Nothing changes for clusters initially deployed before Orka 2.1.
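If you script against the Orka API, the address rule above can be captured in a small helper. The following is a minimal POSIX shell sketch, not part of the Orka CLI: the function name orka_api_ip and its new/old argument are hypothetical, and it assumes you know the first three octets of your Private-1 network and whether the cluster was first deployed with Orka 2.1 or later.

```shell
# Hypothetical helper (not an Orka command): print the Orka API address
# for a cluster, based on the IP scheme described in these notes.
orka_api_ip() {
  # $1: first three octets of the Private-1 network, for example 10.221.188
  # $2: "new" if the cluster was first deployed with Orka 2.1 or later,
  #     "old" if it was deployed before Orka 2.1
  case $2 in
    new) echo "$1.20" ;;
    old) echo "$1.100" ;;
    *) echo "usage: orka_api_ip PREFIX new|old" >&2; return 1 ;;
  esac
}

orka_api_ip 10.221.188 new   # prints 10.221.188.20
orka_api_ip 10.221.188 old   # prints 10.221.188.100
```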

Improvements

Orka 2.1 introduces the following improvements.

- The node list and node status commands now return two separate fields for node type and node status. Learn more about nodes

- Performance improvements

  - Improve the Orka API response time by fixing a memory leak

  - Improve the Orka API response time by changing the way authentication tokens and logs are saved and used

  - The node list command is optimized to return a response 5 times faster than before. The time to return the result is now comparable to the time needed for kubectl get nodes to return.

Bug fixes

- The VM status returned by the vm list and vm status commands is now consistent between the two commands.

- Ensure that deploying two VMs simultaneously on one ARM node succeeds when there are enough resources. Previously, only one of the two deployments succeeded.

- Orka CLI

  - Show a proper error when trying to deploy a VM with a required node tag and no node with that tag is available.

  - On image download, exit the download successfully if Ctrl+C is used. Previously, a warning was shown.

- Orka Web UI

  - Show correctly whether GPU Passthrough is enabled in the VM configs list. Previously, the value was always enabled.

  - Ensure deploying a VM on an ARM node doesn't time out.

Known issues

- Any VMs deployed on ARM-based nodes prior to Orka 2.1.0 might not be functional after the upgrade to Orka 2.1.0. As a workaround, delete those VMs and redeploy them.

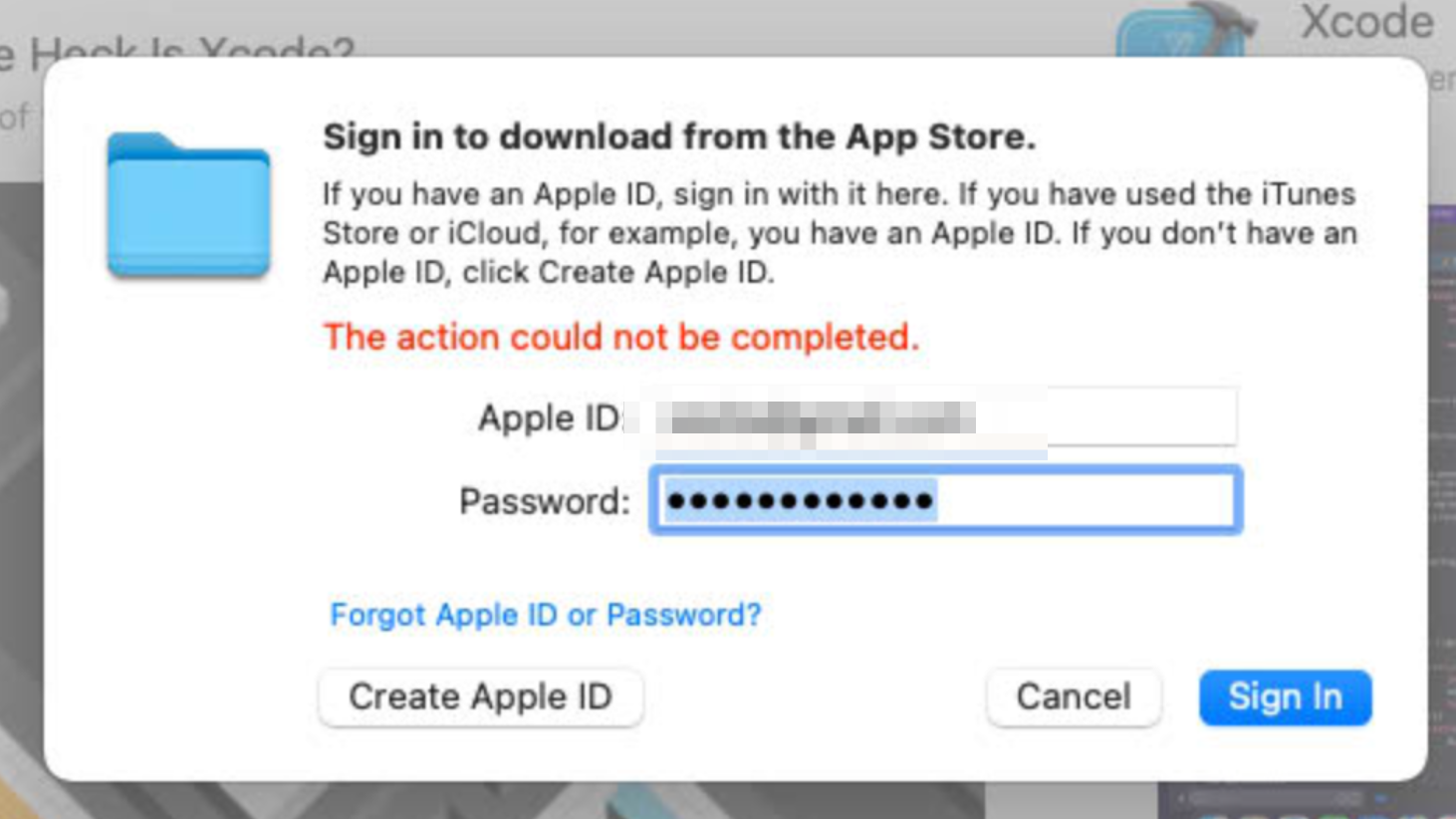

- If you deploy a VM on an Apple ARM-based node and try to log in to your iCloud account, you might receive the error 'The action could not be completed'. This is a limitation of the Apple Virtualization Framework. As a workaround, download the needed software via a web browser and install it manually.

- If git-related operations hang and nothing happens on an Orka VM, Xcode is most likely stuck in the "Verifying Xcode" dialog. To confirm, connect to the VM via VNC, start Xcode, and check whether a small window saying "Verifying Xcode" appears.

To resolve the issue, either VNC to the VM and wait for the verification process to complete or execute the following command from the Terminal to disable Xcode verification:

xattr -d com.apple.quarantine '/Applications/Xcode.app'

- Users running older versions of Orka will start seeing Apple Silicon images when running orka image list-remote. These images are not supported in older versions of Orka, and any attempt to pull them results in an error. To avoid this, ignore these images or upgrade to Orka 2.1.0 or later.
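If you stay on an older Orka version for now, one way to ignore these images is to filter them out by their .orkasi extension. The sketch below is hypothetical: hide_arm_images is not an Orka command, and it assumes the input has one image name per line, which may not match the actual orka image list-remote output format.

```shell
# Hypothetical helper (not an Orka command): drop Apple Silicon images,
# identified by the .orkasi extension, from a list of image names.
# Assumes one image name per line on stdin.
hide_arm_images() {
  # -v inverts the match; "|| true" keeps the exit status 0 when every line is filtered out
  grep -v -E '\.orkasi[[:space:]]*$' || true
}

# Example input: the first name comes from these notes, the second is made up.
printf '%s\n' 90GBMontereySSH.orkasi my-intel-image.img | hide_arm_images   # prints my-intel-image.img
```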

How to upgrade

Scheduled maintenance window required

Orka 2.1.0 and 2.1.1 are new Orka releases. For more information, see Orka Upgrades.

This release requires a maintenance window of 2 or more hours, depending on the size of the cluster.

- Submit a ticket through the MacStadium portal.

- Suggest a time for the maintenance window that works for you.

The suggested time(s) must be Monday through Thursday, 5am to 6pm PST (8am to 9pm EST).
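Before submitting the ticket, you can sanity-check a proposed time against the stated window. This is a minimal POSIX shell sketch with a hypothetical function name (in_maintenance_window is not a MacStadium tool); it assumes the day and time are already expressed in Pacific time, that the rule applies to the start time, and that 6pm is the exclusive end of the window.

```shell
# Hypothetical pre-check (not a MacStadium tool) for a proposed maintenance start time.
# Arguments: a weekday name and a 24-hour HH:MM time, both already in Pacific time.
# Assumes the window is Monday-Thursday from 05:00 up to, but not including, 18:00.
in_maintenance_window() {
  mw_day=$1
  mw_hour=${2%%:*}
  mw_minute=${2#*:}
  # Drop one leading zero so values such as "08" are not parsed as octal.
  mw_hour=${mw_hour#0}
  mw_minute=${mw_minute#0}
  mw_total=$(( ${mw_hour:-0} * 60 + ${mw_minute:-0} ))
  case $mw_day in
    Monday|Tuesday|Wednesday|Thursday) ;;
    *) return 1 ;;
  esac
  # 300 = 05:00 and 1080 = 18:00, in minutes after midnight
  [ "$mw_total" -ge 300 ] && [ "$mw_total" -lt 1080 ]
}

in_maintenance_window Tuesday 08:30 && echo "Tuesday 08:30 is inside the window"
in_maintenance_window Friday 10:00 || echo "Friday 10:00 is outside the window"
```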
